Generative AI has already changed how much we can trust images, and there’s no undoing that. What remains crucial is for the tech industry to be transparent about when and how these AI tools are used. Google is taking a step in that direction: starting next week, its Google Photos service will indicate when an image has been edited with the help of AI.

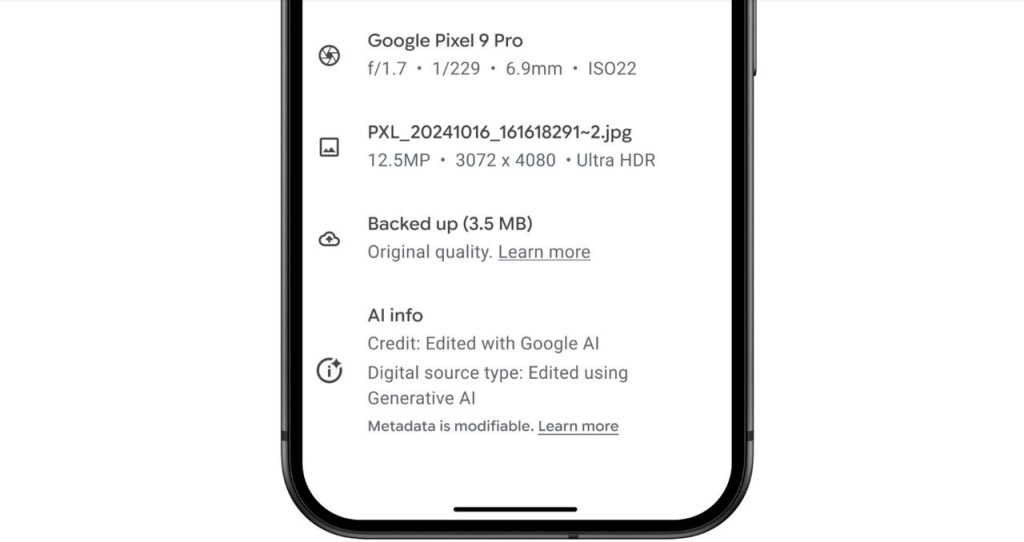

John Fisher, engineering director for Google Photos, shared this update in a recent blog post. According to Fisher, “Photos edited with tools like Magic Editor, Magic Eraser, and Zoom Enhance already include metadata based on technical standards from The International Press Telecommunications Council (IPTC) to indicate that they’ve been edited using generative AI.” He further explained that Google is now making this information more visible, alongside other image details like file name, location, and backup status within the Google Photos app.
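To make the IPTC mechanism more concrete, here is a minimal Python sketch. It is not how Google Photos reads this metadata. It scans a file's raw bytes for an embedded XMP packet and extracts any IPTC `DigitalSourceType` value. IPTC defines this property to record whether media was captured, composited, or produced by a trained model. The function names are hypothetical, and the regex is a naive byte scan rather than a real XMP parser. The set of terms treated as "generative" is an illustrative subset of the IPTC vocabulary. It is also an assumption, not verified against Google's actual output, that Google's tools write their label in this particular property.

```python
import re
from typing import List, Optional

# Base URI of the IPTC NewsCodes "Digital Source Type" controlled vocabulary.
IPTC_DST_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Illustrative subset of vocabulary terms that signal algorithmic or AI media.
GENERATIVE_TERMS = {
    "trainedAlgorithmicMedia",
    "compositeWithTrainedAlgorithmicMedia",
    "algorithmicMedia",
}

# The property name followed, within a short window containing no "<",
# by a vocabulary URI. Covers attribute (Prop="uri"), element (<Prop>uri),
# and rdf:resource serializations of the property.
_DST_PATTERN = re.compile(
    r"DigitalSourceType[^<]{0,40}?" + re.escape(IPTC_DST_BASE) + r"([A-Za-z]+)"
)


def extract_xmp(data: bytes) -> Optional[str]:
    """Return the first embedded XMP packet as text, or None if there is none."""
    match = re.search(rb"<x:xmpmeta\b.*?</x:xmpmeta>", data, re.DOTALL)
    if match is None:
        return None
    return match.group(0).decode("utf-8", errors="replace")


def digital_source_types(data: bytes) -> List[str]:
    """List every IPTC DigitalSourceType term found in the file's XMP packet."""
    xmp = extract_xmp(data)
    return _DST_PATTERN.findall(xmp) if xmp is not None else []


def has_generative_label(data: bytes) -> bool:
    """True if any DigitalSourceType term marks the media as AI-generated or AI-edited."""
    return any(term in GENERATIVE_TERMS for term in digital_source_types(data))
```

To check a file, read it in binary mode and pass the bytes in, e.g. `has_generative_label(open("photo.jpg", "rb").read())` with a hypothetical path. A missing value proves nothing, because metadata like this is easy to strip. The check can only confirm a label that has survived.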

The new “AI info” section will make these editing details easier to find, both on the web and in the Google Photos mobile app. Surfacing this information helps users see when an image has been altered with AI-powered tools, adding a layer of accountability to the process.

These labels won’t be limited to generative AI edits. Google Photos will also note when a photo combines elements from multiple images, as with Pixel’s Best Take and Add Me features, which let users merge parts of different shots into a single composite. The move is encouraging: it shows an effort to help users understand what they are looking at, even when the editing involved tools other than generative AI.

However, it’s important to note that this metadata isn’t foolproof. People who intentionally want to hide the fact that AI was involved in creating or modifying an image can find ways to bypass these labels. Fisher acknowledged this limitation, stating that Google will continue working on improving transparency in this area by gathering feedback and evaluating other solutions.

Until now, the metadata attached by Google’s AI tools has been largely invisible to everyday users. The absence of a clear “this was made with AI” label in Google Photos has been a concern, particularly given the growing use of tools like Magic Editor’s Reimagine feature, which lets users insert AI-generated objects into images even when those objects were never part of the original scene. Both Google and Samsung offer AI tools that can drastically alter an image’s content in this way. Apple, which plans to introduce its own AI image generation features in the upcoming iOS 18.2 release, has taken a different stance. Apple’s Craig Federighi stated that the company is intentionally avoiding generating photorealistic content, expressing concern over how AI could blur the line between real and fabricated images. Apple is clearly aware that AI can cast doubt on whether photos accurately reflect reality, and it is treading carefully in this space.

Photo manipulation is not new; image retouching has been around for decades. However, the current generation of AI tools has made it easier than ever to create realistic yet entirely fabricated images with minimal effort or skill. Work that once required a professional graphic designer can now be done with a few taps or clicks in an AI-powered app. This rapid advancement has left many people questioning whether they can trust what they see in photos, especially when AI edits are subtle or convincing enough to be almost indistinguishable from reality.

The question of trust in photos is not a trivial one. We have long relied on photos to capture and preserve memories, document events, and even provide evidence. When AI blurs the line between what’s real and what’s digitally fabricated, it can erode our confidence in the authenticity of images and make it harder to judge what we are actually looking at.

Google’s effort to make AI edits more transparent is a step in the right direction, but it is clearly only a beginning. As AI technology continues to evolve, the industry will need to keep its transparency and accountability measures ahead of it. For now, AI labels in Google Photos give users more information and let them make informed judgments about the images they see. They also serve as a reminder that powerful editing tools carry a responsibility to be used ethically and transparently. As Google, Apple, and others continue to innovate in AI, maintaining trust in digital content will remain an ongoing challenge.