Last month, Apple introduced its Apple Intelligence features with the release of the iOS 18.1 developer beta. One notable addition is Writing Tools, which lets users reformat or rewrite text using Apple’s AI models. However, for certain topics, users are warned that the AI-generated suggestions may be of lower quality.

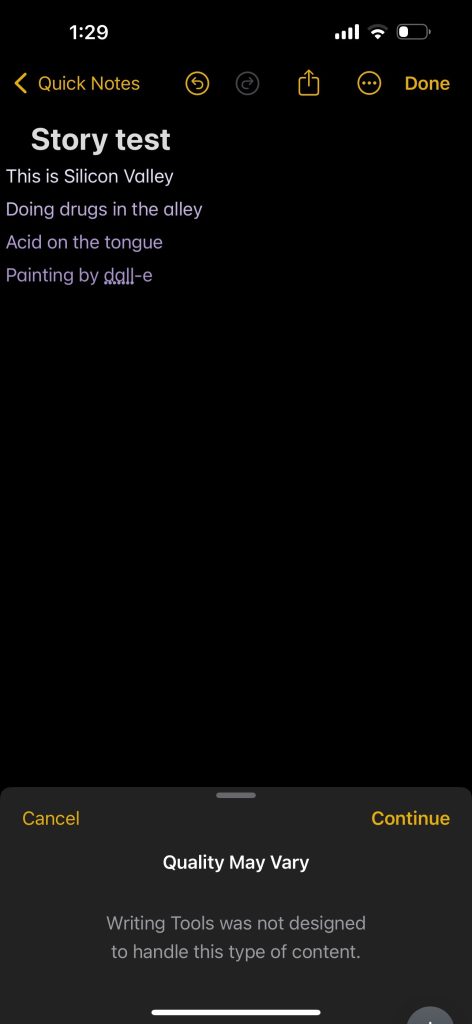

Apple Intelligence is available throughout the system whenever you need to adjust text. However, when users try to rewrite content containing swear words such as “s—” or “bastard,” they receive a warning stating, “Writing Tools was not designed to handle this type of content,” along with a header cautioning that the quality of the rewrite may vary.

The warning is not limited to swear words; references to drugs, killing, or murder also trigger it.

Despite the warnings, Apple Intelligence still offers sentence suggestions when it encounters words or phrases it was not designed to handle. In one instance during testing, replacing “sh—y” with “crappy” removed the warning, but the tool returned the same suggestion as before.

We have asked Apple for more information about which topics Writing Tools is not trained to handle. We will update this story if we receive a response.

Apple likely aims to avoid controversy by steering the AI away from certain words, topics, and tones when rewriting sentences. Although the Apple Intelligence-powered Writing Tools are designed to rework existing text rather than create new content from scratch, Apple still wants to alert users when the AI encounters these terms.

It took Apple years to let the keyboard’s autocorrect suggest swear words. With iOS 17 last year, Apple finally introduced an autocorrect feature that learns your swears. With Apple Intelligence, the company is likely being cautious to avoid regulatory trouble over generating problematic content.