Anthropic has announced significant upgrades to its AI lineup: an upgraded Claude 3.5 Sonnet model, the new Claude 3.5 Haiku model, and a “computer control” feature currently in public beta.

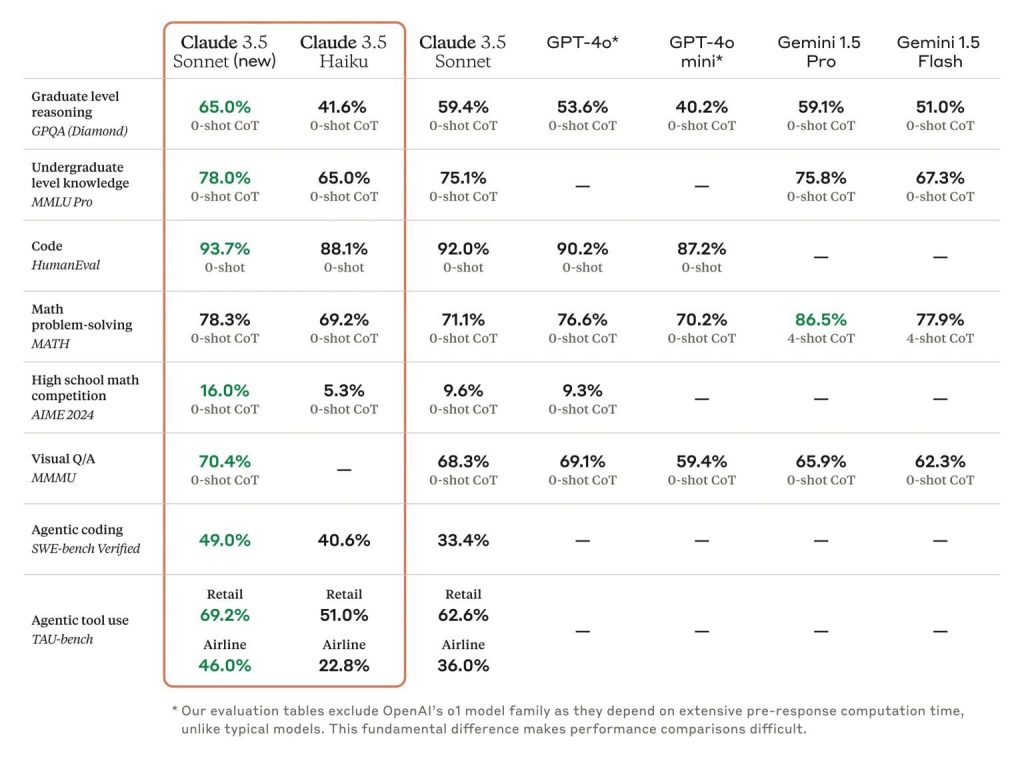

The upgraded Claude 3.5 Sonnet improves across multiple benchmarks, with particularly strong gains in coding. It scored 49.0% on the SWE-bench Verified benchmark, ahead of every publicly available model, including those from OpenAI and systems built specifically for coding.

Anthropic has also introduced a computer control feature that lets Claude operate a computer much as a person does: it views the screen, moves the cursor, clicks, and types. Now available in public beta, the capability makes Claude 3.5 Sonnet the first frontier AI model to offer this kind of interaction.

Leading tech companies have already started integrating these new features into their systems.

“The upgraded Claude 3.5 Sonnet represents a big step forward in AI-driven coding,” noted GitLab, which found up to 10% stronger reasoning across its use cases with no added latency.

The Claude 3.5 Haiku model, scheduled for release later this month, matches Claude 3 Opus, Anthropic's largest model of the previous generation, on many evaluations, while offering speed and cost similar to the previous-generation Haiku. It scored 40.6% on the SWE-bench Verified benchmark, outperforming many publicly available state-of-the-art models, including the original Claude 3.5 Sonnet and GPT-4o.

Anthropic is presenting computer control cautiously, acknowledging that the capability is still experimental and at times error-prone while highlighting its potential. On OSWorld, a benchmark that measures how well models navigate computer interfaces, Claude 3.5 Sonnet scored 14.9% in the screenshot-only category, nearly double the 7.8% achieved by the next-best system.

Anthropic says it put these models through safety assessments before launch, including pre-deployment testing conducted jointly with the U.S. and UK AI Safety Institutes. The company concluded that the ASL-2 Standard, as defined in its Responsible Scaling Policy, remains appropriate for these models.