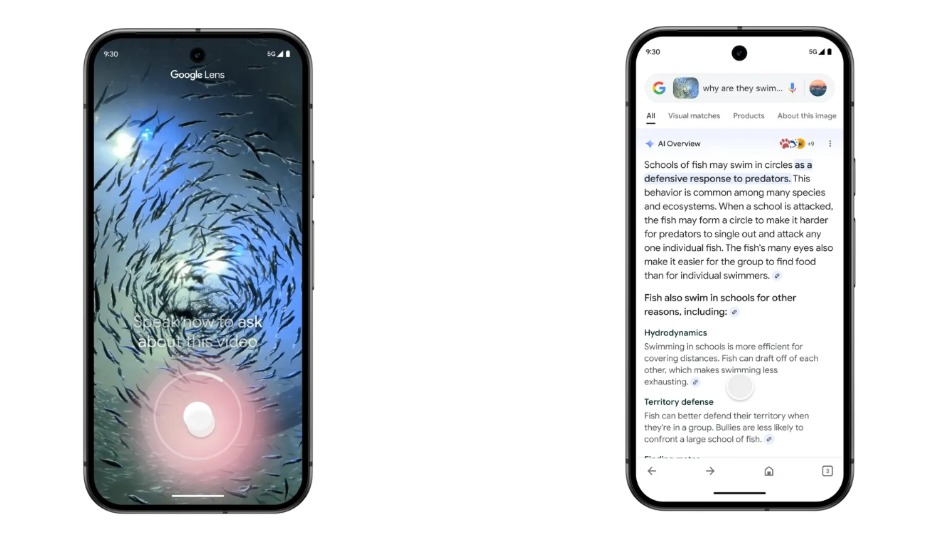

Google Lens now offers a video search feature, allowing users to record a video and ask questions about what they’re seeing. This new functionality goes beyond using a single picture, enabling users to gain insights into what’s happening in a dynamic scene. The feature, accessible through Search Labs on Android and iOS starting today, provides both an AI-generated overview and related search results based on the video content and the question asked.

Google first teased this video-based search capability at its I/O event in May. As an example of how it works, a visitor at an aquarium who is curious about fish behavior could open Google Lens, hold down the shutter button to record the exhibit, and ask a question aloud, like “Why are they swimming together?” Lens then uses Google’s Gemini AI model to analyze the video together with the question and return a relevant answer.

Explaining the technology behind this feature, Rajan Patel, Google’s vice president of engineering, told The Verge that Google captures video as a sequence of image frames, which are then analyzed using existing computer vision techniques from Google Lens. But what sets this apart is Google’s application of a “custom” Gemini AI model, specifically designed to understand multiple frames in sequence. This allows it to process the video’s content and generate a response grounded in web-sourced information.
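To make the "sequence of image frames" idea concrete, here is a minimal Python sketch of how a recorded clip might be reduced to a handful of frames and paired with the spoken question before being handed to a multi-frame model. It is an illustration only, not Google's implementation: the one-frame-per-second sampling rate, the `VideoQuery` type, and the function names are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class VideoQuery:
    """Hypothetical container: which frames to analyze, plus the user's question."""
    frame_indices: list[int]
    question: str


def sample_frame_indices(total_frames: int, fps: float,
                         samples_per_second: float = 1.0) -> list[int]:
    """Pick evenly spaced frame indices from a clip.

    The sampling rate is an assumption for illustration; the article does not
    say how many frames Lens actually analyzes.
    """
    if total_frames <= 0 or fps <= 0 or samples_per_second <= 0:
        return []
    # Keep every `step`-th frame, never skipping fewer than one frame at a time.
    step = max(1, round(fps / samples_per_second))
    return list(range(0, total_frames, step))


def build_query(total_frames: int, fps: float, question: str) -> VideoQuery:
    """Bundle the sampled frames, in order, with the spoken question."""
    return VideoQuery(sample_frame_indices(total_frames, fps), question)


# A 3-second clip recorded at 30 frames per second, sampled once per second.
query = build_query(90, 30.0, "Why are they swimming together?")
print(query.frame_indices)  # [0, 30, 60]
```

Keeping the frames in order matters in a sketch like this because, as Patel describes it, the model is meant to understand multiple frames in sequence rather than each image in isolation.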

Currently, Google Lens doesn’t support sound recognition within videos, so it can’t identify something like a bird call from the audio in a recording. However, Patel noted that Google is experimenting with this capability, indicating that sound identification may be added to Lens in the future.

In addition to the video search feature, Google has enhanced photo search in Lens by adding voice questions. Previously, after snapping a photo, users could only type their questions into Lens. Now, they can hold down the shutter button and ask a question aloud while pointing the camera at their subject. This voice question feature is rolling out globally on Android and iOS, though it currently supports English only.

These updates reflect Google’s push to make search more accessible and intuitive by using AI to understand both what the camera sees and what the user asks aloud. With video search and voice questions, Google Lens aims to give users quick, contextually relevant answers as they explore their surroundings.